The JOS kernel is an already partially implemented kernel that students of MIT course 6.828 finish implementing in a series of labs. I am not an MIT student, however I was still able to complete the course as it is made fully available online for all to benefit. In this article, I will explain how the JOS kernel memory management subsystem works, providing examples from my implementation, which can be found on my github.

Disclaimer: Although I will do my best to provide background information about the x86 architecture, there is no way around reading the manual (note that the 80386 manual has everything you need, and is pretty thin compared to the recent manuals). But I don’t expect you to go off and read the entire manual as a prerequisite to this article; I will simply point to the right sections which will serve as supplementary information.

Whenever you’re dealing with memory management, there are typically two main things you need to worry about: physical pages management, and virtual memory. The typical Operating Systems 101 course will only introduce you to virtual memory; however, you can’t fully understand virtual memory unless you know how to manage physical pages. We’ll go in depth into each one, starting with physical pages management.

Physical Pages Management

Conventional operating systems are great at abstracting memory away from the

application programmer to give the illusion that there is an infinite amount

available (I say conventional because there are other designs possible - see

exokernels). Of course, malloc()

can return NULL which means “out of memory”, but you get the point. However,

when you’re implementing the operating system, you’re the one who has to provide

that illusion, and there isn’t actually an infinite amount of memory

available. Welcome to physical page management.

So the first question to ask is: how do I know how much physical memory is available? The PC platform being the historical mess that it is, there isn’t one clean, API-like way to query the hardware and get a clean “2GB” answer. To quote the book about another educational operating system written by the instructors of the same course, the xv6:

On the x86, there are at least three common algorithms [to determine the amount of memory available]: the first is to probe the physical address space looking for regions that behave like memory, preserving the values written to them; the second is to read the number of kilobytes of memory out of a known 16-bit location in the PC’s non-volatile RAM; and the third is to look in BIOS memory for a memory layout table left as part of the multiprocessor tables. Reading the memory layout table is complicated.

To avoid complications, the JOS kernel simply assumes that there is 256MB of memory. So, now that we know how much memory we have, how do are we going to manage it? To put it in big picture terms, we keep track in some kernel data structure which chunks of memory are currently in use, and which are not. To make our lives easier (and to work with the MMU, which we’ll introduce in the next section), we’ll think of memory in 4Kb chunks, which we call pages. Every page will be marked as either in use, or not in use. For example, in our data structure, we’ll have that page 0 (bytes [0, 4096[) is in use, page 1 (bytes [4096, 8192[) isn’t, and so on.

Let’s examine the specific implementation of the JOS kernel.

// kern/pmap.c

struct PageInfo *pages; // Physical page state array

We keep track of all the memory pages in a global array pages. For each

physical page, there is a corresponding struct PageInfo in this array (i.e.

pages[0] refers to page 0, pages[1] refers to page 1, and so on). Note that

the pages array is a C pointer only because we dynamically allocate it

instead of statically allocating it.

// inc/memlayout.h

struct PageInfo {

// Next page on the free list.

struct PageInfo *pp_link;

// pp_ref is the count of pointers (usually in page table entries)

// to this page, for pages allocated using page_alloc.

uint16_t pp_ref;

};

A struct PageInfo keeps track of two things: a free list link, and a reference

count. The convention is as follows: if the reference count is 0, then the page

is free and is on the free list. But why do we keep a reference count; wouldn’t a

flag is_in_use be enough? To answer this question, we’ll need to understand

virtual memory, so we’ll come back to it.

// kern/pmap.c

static struct PageInfo *page_free_list; // Free list of physical pages

Along with the pages array, we have a pointer page_free_list that points

into the pages array at the first free page. The rest of the free list is

traversed using the pages’ pp_link pointer.

So in general, how do we use the pages array? Well, if you want to know if

page n is in use, you access pages[n]. Or, if you just want a free page, you

take the one pointed to by page_free_list (and update page_free_list to

point to the subsequent item on the list).

Yes, it’s that simple. To convince you that there isn’t more to it, let’s look at the function that other subsystems can use to allocate a page:

// kern/pmap.c

struct PageInfo *

page_alloc(int alloc_flags)

{

struct PageInfo *alloced_page;

// Are we out of free pages?

if (page_free_list == NULL)

return NULL;

// Take the first page off the list and update page_free_list

alloced_page = page_free_list;

page_free_list = page_free_list->pp_link;

alloced_page->pp_link = NULL;

// If the caller wants it zero'ed out, zero it out.

// Note: the page2kva trick has to do with virtual memory, so don't

// worry about it

if (alloc_flags & ALLOC_ZERO) {

memset(page2kva(alloced_page), 0, PGSIZE);

}

// Update the number of allocated pages (just for statistics' sake)

num_page_alloced++;

// Return the page

return alloced_page;

}

That’s all that there is to it! We take all the available memory, divide it up into 4Kb chunks, and keep track of which pages are in use and which aren’t, giving out pages to other subsystems on demand.

Virtual Memory

Virtual memory is probably the hardest thing to understand about memory management, for one reason: it is misunderstood by most people who teach it. The first hit I get when Googling “what is virtual memory?” gives me:

Virtual memory is a memory management capability of an OS that uses hardware and software to allow a computer to compensate for physical memory shortages by temporarily transferring data from random access memory (RAM) to disk storage.

This is WRONG. That is NOT what virtual memory is. The trick described here is one of the things that is enabled by the virtual memory abstraction, but it definitely isn’t virtual memory. That being said, here is my humble attempt to explain what virtual memory really is.

Virtual memory is the decoupling between the addresses stored in a program and

physical addresses. I’ll let that one sink in… So what do I mean by that?

Well, when your CPU is instructed by your program to, for example, store 0 at

address 0xFF203E76, it doesn’t go to address 0xFF203E76 directly. Instead,

it sends 0xFF203E76 through the MMU (the hardware component that manages

virtual memory) to get the physical address to which 0xFF203E76 is mapped -

say 0x00033223. 0x00033223 is where it will store the 0.

The neat thing is that the MMU relies on data structures of a predefined format set up by the operating system to perform this translation… In other words, the operating system decides which (virtual) addresses a program can access, and where these (virtual) addresses map to in (physical) memory. This is also known as an address space.

So what’s the relation between the “transferring data from RAM to disk storage” trick everyone thinks virtual memory is and actual virtual memory? Let me first go into detail into how operating systems set up virtual memory on the x86 architecture, and then I’ll be able to give a more detailed answer.

Virtual Memory on the x86 architecture

For more information, refer to the manual.

As mentioned above, the MMU is a hardware component. Therefore, the operating system must conform to its interface that is literally set in stone (or, in silicon, to be more precise). One of the components of its interface is how it thinks about addresses.

Remember how we divided our physical memory into 4Kb pages? Well, the MMU does

the same. However, instead of indexing the whole memory with one big array like

we did with the pages array, it uses 1024 page tables that each track 1024

pages, and a page directory that tracks all the page tables (for a total of 1024

* 1024 = 1,048,576 pages, the maximum amount addressable by a 32-bit

address). For example, to access page 0, you would access page table 0 in the

page directory at index 0, and then page 0 in that page table at index 0.

Addresses are then divided as shown in the image above: the last 10 bits serve as an index into the page directory, the 10 bits before that serve as an index into the page table, and finally the first 12 bits serve as an index into the actual page. This process is shown here:

Remember how I said that the operating system controls the MMU by carefully setting up data structures of a predefined format? The data structures in question are the page directory and the page tables. The format of a page directory or page table entry is also well defined by the hardware, as we’ll see shortly. Now assume that the operating system has just finished setting up a new address space (i.e. a page directory and page tables are set up and sitting in memory). How do we tell the MMU where our page directory is so that the MMU can start using our new page directory to resolve virtual addresses? Well, we need to load the (physical) address of our page directory into a system register called CR3 (as seen in the bottom left corner of the image above). As soon as we do that, subsequent addresses are translated using this new page directory.

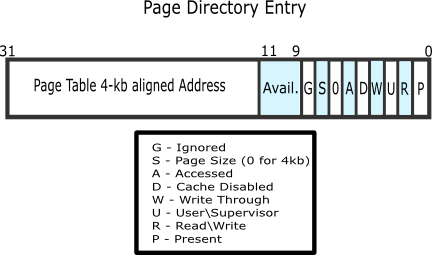

Finally, what does a page directory/page table entry look like? It’s basically an address to the page table or to a page of memory, respectively, along with a bunch of flags.

Source: http://wiki.osdev.org/Paging

Source: http://wiki.osdev.org/Paging

And voilà: that’s all there is to virtual memory. Programs are compiled to instructions that reference virtual addresses. Whenever the CPU needs to access these addresses, it sends the address through the MMU which traverses the page directory and page table structures that were carefully set up by the operating system to find the corresponding physical page to which the address refers. The CPU then performs its memory access there.

The Wrong Virtual Memory Definition Revisited

Now, what’s that “virtual memory extends physical RAM onto the disk” trick everyone is talking about? When the CPU sends a virtual address through the MMU while executing a given user program, the MMU indexes into the page directory or the subsequent page table as we’ve just seen. However, if the Present bit of the page table or page directory entry (see the flags in the image above) is turned off, the CPU will throw an exception, transferring control back to the operating system. The operating system then looks in the CR2 special register to find the address that the user program failed to access; if the address is inside a page that the operating system had previously moved to disk due to heavy memory pressure, then the operating system will:

- Allocate a new physical page (with

page_alloc()). - Copy the contents of the page stored on disk into this newly allocated page.

- Modify the page table entry to point to this newly allocated page.

- Transfer control back to the user program, which will repeat the same instruction, but this time successfully accessing the physical memory page.

Note how this trick is only possible because of the level of indirection provided by virtual memory. If the CPU accessed physical memory directly, there would be no way for the operating system to copy the contents of a memory page to disk in order to reuse the memory page for something else, and bring back the contents of the original memory page only when the program needs it.

Virtual memory in the JOS kernel

The goal of this section is only to give a sense of how a virtual memory-related

functions are implemented in the JOS kernel. We’ll take as an example

page_insert(), which is used to insert a physical page into a page

directory/page table structure.

// kern/pmap.c

int

page_insert(pde_t *pgdir, struct PageInfo *pp, void *va, int perm)

{

pte_t *pte;

// 1.

pte = pgdir_walk(pgdir, va, 1);

if (pte == NULL)

return -E_NO_MEM;

// 2.

pp->pp_ref++;

// 3.

page_remove(pgdir, va);

// 4.

*pte = page2pa(pp) | perm | PTE_P;

return 0;

}

page_insert() takes four arguments: pgdir a pointer to a page directory in

memory, pp a pointer to a struct PageInfo which corresponds to the physical page

that is to be inserted, va a virtual address to which the physical page is to be

mapped, and perm a set of flags (which we won’t worry about). Let’s walk through the

code.

-

We use the convenient function

pgdir_walk()to give us a pointer to the page table entry for the page in whichvapoints (See Figure 5.9).pgdir_walk()essentially traverses the page directory and page tables in software the same way that the MMU does it in hardware. The third argument,1, instructspgdir_walk()to create a new empty page table if it doesn’t exist yet. Thus, if it needs to allocate a new page for a page table but there is no memory pages left, it will returnNULL. -

We increment the reference count of the physical page.

-

We remove any physical page that would already be mapped at

va. That is to say, if the Present bit is set in the page table entry forva, we check the address stored in the entry (see “Page Directory Entry” image above), decrement the reference count of the physical page pointed to by the address in the entry, and unset the Present bit in the page table entry. The net effect is that any mapped physical page is removed from the address space. -

Finally, we rewrite the page table entry with

pp’s physical address, and the right set of flags.page2pa()is a tiny function which calculates the physical address of a givenstruct PageInfobased on its offset from the beginning of thestruct PageInfoarraypages. It is defined inkern/pmap.h.

Remember when we asked why we used a reference count for physical pages instead

of some is_in_use flag? That is because virtual memory allows you to map two

different virtual pages to the same physical page (i.e. two different page

table entries contain the same address field). This is known as a memory

mapping. Therefore, we need to wait for any mapping to the same physical page

from all the page tables to be removed until we can safely declare the physical

page to be “not in use”. To do that, every time we map the physical page in a

page table, we increase the reference count, and every time we remove the

mapping from a page table, we decrease the count. Whenever the count hits 0, the

physical page is considered “not in use”.

Final thoughts

I realize that there’s a few textbooks’ worth of information crammed up in this one article. I don’t expect anyone without any prior operating systems knowledge to read this and understand everything. The purpose of the article was to give a sense of what is involved in implementing a basic operating system. I sometimes read questions on Quora: “Do you need to know assembly to understand how operating systems work?”. My answer is: if you want to be serious about it then yes, you certainly do. And not only do you need to know assembly, but you also need to understand the low level details of the architecture you’re working on (e.g. how the MMU works with the page directory and page tables), how programs are stored in binary format (e.g. ELF format), how they are linked, etc. This might sound like a heavy-duty laundry list, but it’s not - it’s actually a lot of fun!

For anyone who would is interested in spending time to actually learn this well, I’ll share my path of how I got there. I first read The Linux Programming Interface to understand what it meant to “program for an operating system”. My previous experience at the time was mostly with Java or web technologies which hide the operating system from you. It also made me learn C really well by writing a myriad of programs. Next, I read Computer Organization and Design to get a good grasp of how computers work at the gate level. Finally, I was ready for this course (MIT 6.828).

Of course, this is only one of many possible paths. Make your own way, and most importantly, have fun doing it! Happy hacking!